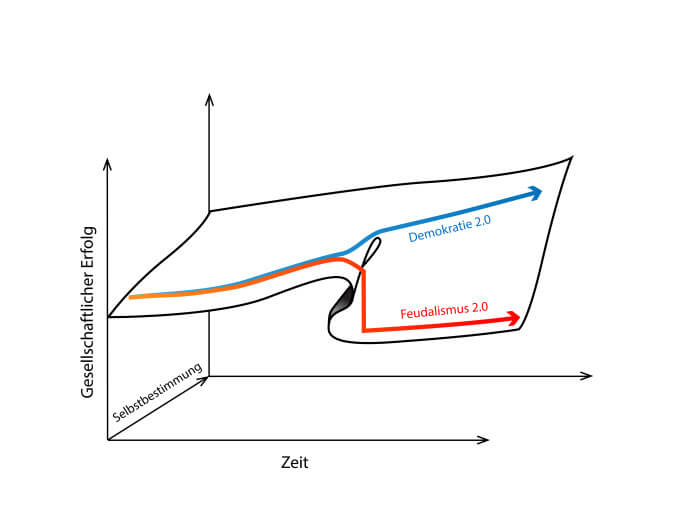



PLANETARY-SCALE THREATS BY THE DIGITAL REVOLUTION by Dirk Helbing

Monday, 3 August 2020


(Draft Version 1)

We have had such high hopes for the positive potential of the digital revolution, and the biggest threat most people can imagine is an Internet outage or a hacking attack. However, it appears that Social Media, once seen as an opportunity for a fairer, participatory society, have turned (or been turned) into promoters of fake news and hate speech. It also turns out that Big Data has empowered businesses and secret services, while in comparison citizens have probably lost power. Exposed to an attention economy and surveillance capitalism, people may become objects of algorithms in an increasingly data-driven and AI-controlled world. Therefore, the question is: After the digital “singularity”, will we be “Gods” in a “digital paradise” – or subjected to a superintelligent system, without fundamental rights, human dignity, and freedom?

Big Data

The digital revolution progresses at a breath-taking speed. Almost every year, there seems to be a new hype.[1] Laptops, mobile phones, smartphones, tablets, Big Data, Artificial Intelligence, Robotics, 3D Printing, Virtual Reality, Augmented Reality, Internet of Things, Quantum Computing, and Blockchain Technology give only a partial picture of the developments. People, lawyers, politicians, the media – they all seem to struggle to keep track of the emerging technologies. Can we create the needed governance frameworks in time?

While many people consider Big Data to be the “oil” of the digital revolution, they consider Artificial Intelligence to be its “motor”. It has become a sport to collect as much data as possible, since business opportunities appear to increase with the amount of data at hand. Accordingly, many excuses have been found to collect data about basically every one of us, at any time and anywhere. These reasons include

· “to save the world”,

· “for security reasons”,

· “knowledge is power”, and

· “data is the new oil”.

In today’s “surveillance capitalism”,[2] it is not just secret services that spy on us, but private companies as well. We are being “profiled”, which means that highly detailed (pro)files are produced about us. These (pro)files can contain a lot more data than one would think:

– income data

– consumption data

– mobility patterns

– social contacts

– keywords appearing in emails

– search patterns

– reading habits

– viewing patterns

– music taste

– activities at home

– browsing behavior

– voice recordings

– photo contents

– biometrical data

– health data

– and more.

Of course, there is also a lot of other data that is inferred:

– sexual orientation

– religion

– interests

– opinions

– personality

– strengths

– weaknesses

– likely voting behaviors

– and more.

Surveillance Capitalism

You may, of course, wonder why anyone would record all this data. As I said before, money and power are two of the motivations. Surveillance capitalism basically lives on the data that you provide – either voluntarily or not. Today, however, you can basically not use the Internet anymore unless you click “ok” and thereby legally agree to data collection and processing that you would probably never find acceptable if you read and fully understood the Terms of Use. A lot of statements that tech companies force you to agree to are intentionally misleading or ambiguous, so you would understand them differently from how they are meant. “We value your privacy”, for example, probably means “We turn your private data into value” rather than “We protect your privacy and, hence, do not collect data about you.”

According to estimates, gigabytes of data are being collected about everyone in the industrialized world every day. This corresponds to several photographs per day. As we know from the Snowden revelations,[3] secret services accumulate the data of many companies and analyze it in real time. The storage space of the new NSA data center, for example, seems big enough to store up to 140 terabytes of data about every human on Earth. This corresponds to dozens of standard laptop hard disks or to the storage space of about 1000 standard smartphones today.
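The comparison above can be checked with a short back-of-the-envelope calculation. This is only a sketch: the 140 terabytes come from the text, while the device capacities (a 2-terabyte laptop disk and a 128-gigabyte smartphone) are assumptions chosen for illustration, not figures from the source.

```python
# Back-of-the-envelope check of the storage comparison in the text.
# Only the 140 TB per person comes from the text; the device sizes
# below are illustrative assumptions.
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes

per_person_bytes = 140 * TB
laptop_disk_bytes = 2 * TB    # assumed capacity of a laptop hard disk
smartphone_bytes = 128 * GB   # assumed capacity of a smartphone

laptop_disks = per_person_bytes / laptop_disk_bytes
smartphones = per_person_bytes / smartphone_bytes

print(f"Laptop disks per person: {laptop_disks:.0f}")
print(f"Smartphones per person:  {smartphones:.0f}")
```

Under these assumptions the result is 70 laptop disks, or roughly a thousand smartphones, per person, which is in line with the orders of magnitude stated above. Other assumed capacities shift the numbers but not the overall picture.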

You would probably be surprised what one can do with all this data. For example, one thing that the NSA can do is to find you based on your voice profile.[4] So, suppose you go on holiday and decide to leave your smartphone at home for the sake of “digital detox”. However, you happen to talk to someone at the pool bar, who has his or her smartphone right next to him- or herself. Then, this smartphone can pick up your voice, figure out who and where you are, and whom you are talking to. Moreover, some Artificial Intelligence algorithm may turn your conversation into written text, translate it into other languages in real-time, and search for certain “suspicious” keywords. In principle, given that we typically speak just a few hundred or thousand words per day, it would be possible to record and store almost everything we say.

The DARPA project “Lifelog” intended to go even further than that.[5] It wanted to record everyone’s entire life and make it replayable. You may not be surprised that this project seems to have inspired Facebook, which even creates profiles about people who are not members of Facebook. But this is just the beginning. The plans go far beyond this. As you will learn in this chapter, the belief that you “have nothing to hide” will not be able to protect you.

You are probably familiar with some of the revelations of Edward Snowden related to the NSA, GCHQ, and Five Eyes Alliance. These cover everything from mass surveillance to psychological operations, state-based cybermobbing, hacker armies, and digital weapons.

Most people, however, are not aware of the activities of the CIA, which might be even more dangerous to human rights, in particular as its spying activities can be combined with real-life operations on people worldwide. Some of these activities have been revealed by WikiLeaks under the name “Vault 7”.[6] The leaks show that the CIA is hacking basically all electronic devices, including smart TVs, modern cars, and the Internet of Things. Recently, we have also learned that, in earlier decades, the CIA spied on more than 100 countries by means of compromised encryption devices sold by Crypto AG in Zug.[7]

Digital Crystal Ball

With so much data at hand, one can make all sorts of science-fiction dreams come true. One of them is the idea to create a “digital crystal ball”.[8] Just suppose one could access measurement sensors, perhaps also microphones and cameras of smartphones and other devices in real-time, and put all this information together.

For a digital crystal ball to work, one would not have to access all sensors globally at the same time. It would be enough to access enough devices around the place(s) one is currently interested in. Then, one could follow the events in real time. Using behavioral data and predictive analytics as well would even allow one to look a bit into the future, in particular if personal agenda data were accessed too. I do not need to stress that the above would be very privacy-invasive, but the reader can certainly imagine that a secret service or the military would nevertheless like to have such a tool.

It is likely that such a digital crystal ball already exists. Private companies have worked on this as well. This includes, for example, the company “Recorded Future”, which Google has apparently established together with the CIA,[9] and the company “Palantir”, which seems to work or have worked with Facebook data (among others).[10] Such tools also play an important role in “predictive policing” (discussed later).

Profiling and Digital Double

In order to offer us personalized products and services (and also personalized prices), companies like to know who we are, what we think and what we do. For this purpose, we are being “profiled”. In other words, a detailed file, a “profile”,[11] is being created about each and every one of us. Of course, these profiles are a lot more detailed than the files that secret services of totalitarian states used to have before the digital revolution. This is quite concerning, because the mechanisms to prevent misuse are currently pretty ineffective. In the worst case, a company would be closed down after years of legal battles, but already the next day, there may be a new company doing a similar kind of business with the same algorithms and data.

Today’s technology even goes a step further, by creating “digital twins” or “digital doubles”.[12] These are personalized, kind of “living” computer agents, which bear our own personal characteristics. You may imagine that there is a black box for every one of us, which is being fed with surveillance data about us.[13] If the black box is not only a data collection, but capable of learning, it can even learn to show our personality features. Cognitive computing[14] is capable of doing just this. As a result, there are companies such as “Crystal Knows”[15] (which used slogans such as “See anyone’s personality”). Apparently it offered to look up personality features of neighbors, colleagues, friends and enemies – like it or not. Several times, I have been confronted with my own profile, in order to figure out how I would respond to the fact that my psychology and personality had been secretly determined without my informed consent. But this is just another ingredient in an even bigger system.

World Simulation (and “Benevolent Dictator”)

The digital doubles may actually be quite sophisticated “cognitive agents”. Based on surveillance data, they may learn to decide and act more or less realistically in a virtual mirror world. This brings us to the “World Simulator”, which seems to exist as well.[16] In this digital copy of the real world, it is possible to simulate various alternative scenarios of the future. This can certainly be informative, but it does not stop there.

People using such powerful simulation tools may not be satisfied with knowing potential future courses of the world – with the aim of being better prepared for what might come upon us. They may also want to use the tool as a war simulator and planning tool.[17] Even if they used it in a peaceful way, they would like to select a particular future path and make it happen – without proper transparency and democratic legitimation. Using surveillance data and powerful artificial intelligence, one might literally try to “write history”. Some people say this has already happened and that “Brexit” was socially engineered this way.[18] Later, when we talk about behavioral manipulation, the possibility of such a scenario will become clearer.

Now, suppose that the World Simulator has identified a particularly attractive future scenario, e.g. one with significantly reduced climate change and much higher sustainability. Wouldn’t this intelligent tool know better than us? Shouldn’t we, therefore, ensure that the world will take exactly this path? Shouldn’t we follow the instructions of the World Simulator, as if it was a “benevolent dictator”?

It may sound plausible, but I have questioned this for several reasons.[19] One of them is that, in order to optimize the world, one needs to select a goal function, but there is no science telling us which one would be the right one to choose. In fact, projecting the complexity of the world onto a one-dimensional function is a gross over-simplification, which is a serious problem. Choosing a different goal function, however, may lead to a different “optimal” scenario, and the actions we would have to perform might be totally different. Hence, if there is no transparency about the goals of the World Simulator, it may easily lead us astray.
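The dependence of the “optimal” scenario on the chosen goal function can be illustrated with a toy calculation. Everything in this sketch is hypothetical: the three scenarios, their scores on two dimensions, and the weights are made up for illustration and do not describe any real World Simulator.

```python
# Toy illustration: the "best" scenario depends on the goal function.
# Scenarios, scores, and weights are invented for illustration only.
scenarios = {
    "A": {"sustainability": 0.9, "prosperity": 0.3},
    "B": {"sustainability": 0.5, "prosperity": 0.7},
    "C": {"sustainability": 0.2, "prosperity": 0.9},
}

def best_scenario(weights):
    """Collapse each scenario to one number (a weighted sum) and pick the maximum."""
    def goal(name):
        return sum(weights[k] * scenarios[name][k] for k in weights)
    return max(scenarios, key=goal)

# Two different one-dimensional goal functions over the same scenarios.
green = best_scenario({"sustainability": 0.8, "prosperity": 0.2})
growth = best_scenario({"sustainability": 0.2, "prosperity": 0.8})

print("Goal function weighting sustainability:", green)
print("Goal function weighting prosperity:    ", growth)
```

With these made-up numbers, the first goal function selects scenario A and the second selects scenario C, although nothing about the scenarios themselves has changed. Whoever sets the weights effectively decides the outcome, which is why transparency about the goal function matters.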

Attention Economy

In the age of the “data deluge”, we are being overloaded with information. In the resulting “attention economy,” it is impossible to check all the information we get, and whether it is true or false. We are also not able to explore all reasonable alternatives. When attention is a scarce resource (perhaps even scarcer than money), people get into a reactive rather than an active or proactive mode. They respond to the information according to a stimulus-response scheme, and tend to do what is suggested to them.[20]

This circumstance makes people “programmable”, but it also creates a competition for our attention. Whoever comes up with the most interesting content, whoever is more visible or louder will win the competition. This mechanism favors fake news, because it is often more interesting than facts. When Social Media platforms try to maximize the time we are spending on them, they will end up promoting fake news and emotional content, in particular hate speech. We can see where this leads.

In the meantime, Social Media platforms face more and more difficulties in promoting a constructive dialogue among people. What once started off as a chance for more participatory democracies has turned into a populist hate machine. Have Social Media become the weapons of a global information war? Probably so.

Conformity and Distraction

As some people say, Social Media are also used as “weapons of mass distraction”, which increasingly distract us from the real existential problems of the world. They, furthermore, create entirely new possibilities of censorship and propaganda. Before we go into details, however, it is helpful to introduce some basics.

First, I would like to mention the Asch conformity experiment.[21] Here, an experimenter invites a person into a room, in which there are already some other people. The task is simple: everyone has to compare the length of a stick (or line) to three sticks (or lines) of different lengths and say which of the three sticks (or lines) it matches.

However, before the experimental subject is asked, everyone else will voice their verdict. If all answer truthfully, the experimental subject will give the right answer, too. However, if the others have consistently given a wrong answer, the experimental subject will be confused – and often give the wrong answer, too. The subject does not want to deviate from the group opinion, for fear of appearing ridiculous. Psychology speaks of “group pressure” towards conformity.

Propaganda can obviously make use of this fact. It may trick people by the frequent repetition of lies or fake news, which may eventually appear true. It is obviously possible to produce a distorted world view in this way – at least for some time.

Second, I would like to mention another famous experiment. Here, there are two basketball teams, for example, one wearing black shirts, the other one wearing white shirts. Observers have to count, say, how often the ball is passed by people in white shirts, and how often by people in black shirts. The task requires quite a bit of concentration. It is more demanding to count the two numbers correctly than one might think.

In the end, observers are asked for the numbers – and whether they noticed anything in particular. Typically, they would answer “no”, even though someone in a gorilla suit was walking through the scene. In fact, many do not see it. This is called “selective attention”, and it explains why people often do not see “the elephant in the room” if they are being distracted by something else.

Censorship and Propaganda

The selective attention effect is obviously an inherent element of the attention economy. The conformity effect can be produced by filter bubbles and echo chambers.[22] Both are being used for censorship and propaganda.

In order to understand the underlying mechanism, one needs to know that Social Media do not send messages to a choice of recipients predetermined by the sender (which is in contrast to the way Emails or text messages are being sent). It is an algorithm that decides how many people will see a particular message and who will receive it. Therefore, the Social Media platform can largely determine which messages spread and which ones find little to no attention.

It’s not you who determines the success of your idea, but the Social Media platform. While you are given the feeling that you can change the world by sending tweets and posts, liking and following people, this is far from the truth. Your possibility to shape the future has rather been contained.

Even without deleting a Social Media message, it is quite easy to create propaganda or censorship effects by amplifying or reducing the number of recipients. In the meantime, algorithms may also mark certain posts as “fake news” or “offensive”, or Social Media platforms may have certain posts deleted by “cleaners”[23] or in algorithm-based ways. Some communities have learned to circumvent such digital censorship by heavily retweeting certain contents. However, their accounts are now often blocked or shadow-banned as “extremist”, “conspiracy” or “bot-like” accounts.

In fact, the use of propaganda methods is at the heart of today’s Social Media. Before we discuss this in more detail, let us look back in history. Edward Bernays, a nephew of Sigmund Freud, was one of the fathers of modern propaganda. He was an expert in applied psychology and knew how methods used to advertise products (such as frequent repetitions or creating associations with other themes such as success, sex or strength) could be used to promote political or business interests. His book “Propaganda”[24] was used a lot by Joseph Goebbels. In combination with new mass media such as the radio (“Volksempfänger”), the effects were scaled up to an entire country. At that time, people were not prepared to distance themselves from this novel approach of “brainwashing”. The result was a dangerous kind of “mass psychology”. It largely contributed to the rise of fascist regimes. World War II and the Holocaust were the outcome.

Meanwhile, unfortunately, driven by marketing interests and the desire to exert power, there are even more sophisticated and effective tools to manipulate people. I am not only talking about bots[25] that multiply certain messages to increase their effect. We are also heading towards robot journalism.[26] Some AI tools are already such convincing storytellers that they have been judged too dangerous to release.[27]

Recently, the world seems to have entered a post-factual era[28] and is plagued by fake news. There are even “deep fakes”,[29] i.e. it is possible to manufacture videos of people, in which they say anything you like. The outcome is almost indistinguishable from a real video.[30] One can also modify video recordings in real time and change somebody’s facial expressions.[31] In other words, digital tools provide perfect means for manipulation and deception, which can undermine modern societies that are based on informed dialogue and facts. Unfortunately, such “PsyOps” (Psychological Operations) are not just theoretical possibilities.[32] Governments apply them not only to foreign populations, but even to their own – something that has apparently been legalized recently[33] and made possible by handing over control of the Internet.[34]

Targeting and Behavioral Manipulation

It is frequently said that we consciously perceive only about 10% of the information processed by our brain. The remaining information may influence us as well, but in a subconscious way. Hence, one can use so-called subliminal cues[35] to influence our behavior, while we would not even notice that we have been manipulated. This is also one of the underlying success principles of “nudging”.[36] Putting an apple in front of a muffin will make us choose the apple more frequently. Hence, tricks like these may be used to make us change our behavior.

Some people argue that it is impossible NOT to nudge people anyway, as our environment would always influence us in subtle ways. However, I find it highly concerning when personal data, often collected by mass surveillance, is being used to “target” us specifically and very effectively with personalized information.

We are well aware that our friends are able to manipulate us. Therefore, we choose our friends carefully. Now, however, there are companies that know us better than our friends and can manipulate us quite effectively, without our knowledge. It is not just Google and Facebook that try to steer our attention, emotions, opinions, decisions and behaviors, but also advertisement companies and others that we do not even know by name. Due to a lack of transparency, it is basically impossible to exercise our right to informational self-determination or to complain about these companies.

With the personalization of information, propaganda has become a lot more sophisticated and effective than when the Nazis came to power in the 1930s. People who know that there is almost no webpage or service on the Internet that is not personalized in some way even speak of “The Matrix”.[37] Not only may your news consumption be steered, but also your choice of holiday destination and the partners you date. Humans have become the “laboratory rats” of the digital age. Companies run millions of experiments every day to figure out how to program our behavior ever more effectively.

For example, one of the Snowden revelations has provided insights into the JTRIG program of the British secret service GCHQ.[38] Here, the cognitive biases of humans[39] have been mapped out, and digital ways have been developed to use them to trick us.

While most people think that such means are mainly used in psychological warfare against enemies and secret agents, the power of Artificial Intelligence systems today makes it possible to apply such tricks to millions or even billions of people in parallel.

We know this from experts like the former “social engineer” Tristan Harris,[40] who has previously worked in one of Google’s control rooms, and also from the Cambridge Analytica election manipulation scandal.[41] Such digital tools are rightly classified as digital weapons,[42] since they may distort the world view and perception of entire populations. They could also cause mass hysteria.

Citizen Score and Behavioral Control

Manipulating people by “Big Nudging” (a combination of “nudging” with “Big Data”) does not work perfectly. Therefore, some countries aim at even more effective ways of steering people’s behavior. One of them is known as “Citizen Score”[43] or “Social Credit Score”.[44] This introduces a neo-feudalist system, where rights and opportunities depend on personal characteristics such as behavior or health.

Currently, there seem to be hundreds of behavioral variables that matter in China.[45] For example, if you paid your rent or your loan a few days late, you would get minus points. If you didn’t visit your grandmother often enough, you would get minus points. If you crossed the street at a red light (no matter whether you obstructed anybody else or not), you would get minus points. If you read critical political news, you would get minus points. If you had “the wrong kinds of friends” (those with a low score, e.g. those who read critical news), you would get minus points.

Your overall number of points would then determine your Social Credit Score. It would decide about the jobs you can get, the countries you can visit, the interest rate you would have to pay, your possibility to fly or use a train, and the speed of your Internet connection, to mention just a few examples.

In the West, companies are using similar scoring methods. Think, for example, of the credit score, or the Customer Lifetime Value,[46] which are increasingly being used to decide who will receive what kinds of offers or benefits. In other words, people are also ranked in a neo-feudalist fashion. Money reigns in ways that are probably in conflict with the equality principle underlying human rights and democracies.

This does not mean there are no Citizen Scores run by government institutions in the West. It seems, for example, that a system similar to the Social Credit Score was first invented by the British secret service GCHQ. Even though the state is not allowed to rank the lives of people,[47] there exists a “Karma Police” program,[48] which judges everyone’s value for society. This score considers everything from watching porn to the kind of music you like.[49] Of course, all of this is based on mass surveillance. So, in some Western democracies, we are not far from punishing “thought crimes”.

Digital Policing

This brings us to the subject of digital policing. We must be aware that, besides political power and economic power, there is now a new, digital form of power. It is based on two principles: “knowledge is power” and “code is law”.[50] In other words, algorithms increasingly decide how things work, and what is possible or not. Algorithms introduce new laws into our world, often evading democratic decisions in parliament.

The digital revolution aims at reinventing every aspect of life and finding more effective solutions, also in the area of law enforcement. We all know the surveillance-based fines we have to pay if we drive above the speed limit on a highway or in a city. The idea of “social engineers” is now to transfer the principle of automated punishment to other areas of life as well. If you illegally download a music or movie file, you may get into trouble. The enforcement of intellectual property, including the use of photos, will probably be a lot stricter in the future. But travelling by plane or eating meat, drinking alcohol or smoking, and a lot of other things might soon be automatically punished as well.

For some, mass surveillance finally offers the opportunity to perfect the world and eradicate crime forever. As in the movie “Minority Report”, the goal is to anticipate – and stop – crime, before it happens. Today’s PreCrime and predictive policing programs already try to implement this idea. Based on criminal activity patterns and a predictive analytics approach, police will be sent to anticipated crime hotspots to stop suspicious people and activities. It is often criticized that the approach is based – intentionally or not – on racial profiling, suppressing migrants and other minorities.

This is partly because predictive policing is not accurate, even though a lot of data is evaluated. Even when using Big Data, there are errors of the first and second kind, i.e. false alarms and alarms that do not go off. If the police want a sensitive algorithm that misses only very few suspects, the result will be a lot of false alarms, i.e. lists with millions of suspects who are actually innocent. In fact, in predictive policing applications the rate of false alarms is often above 90%.[51] This requires a lot of manual postprocessing to remove false positives, i.e. probably innocent suspects. However, there is obviously a lot of arbitrariness involved in this manual cleaning – and hence the risk of applying discriminatory procedures.
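Why a sensitive algorithm produces mostly false alarms can be seen from a simple base-rate calculation. The following is a minimal sketch with made-up numbers: the population size, the number of actual offenders, the sensitivity, and the false-positive rate are all assumptions for illustration, not data from any real predictive policing system. The “rate of false alarms” is interpreted here as the share of flagged people who are innocent.

```python
def false_alarm_share(population, offenders, sensitivity, false_positive_rate):
    """Share of all flagged people who are actually innocent."""
    innocents = population - offenders
    true_alarms = sensitivity * offenders           # offenders correctly flagged
    false_alarms = false_positive_rate * innocents  # innocents wrongly flagged
    return false_alarms / (true_alarms + false_alarms)

# Hypothetical numbers: 100 offenders among 1,000,000 people, an algorithm
# that catches 99% of offenders and wrongly flags 1% of innocent people.
share = false_alarm_share(
    population=1_000_000,
    offenders=100,
    sensitivity=0.99,
    false_positive_rate=0.01,
)
print(f"Share of flagged people who are innocent: {share:.1%}")
```

Under these assumptions, about 10,000 innocent people are flagged alongside 99 actual offenders, so well over 90% of the alarms are false, even though the algorithm is “99% accurate” in both directions. The effect arises because offenders are rare compared to the population being screened.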

I should perhaps add that “contact tracing” might also be counted among the digital policing approaches – depending on how a society treats people suspected of being infected. In countries such as Israel, it has been decided to identify infected persons with software that was originally created to hunt down terrorists. This means that infected people are treated almost like terrorists, which has raised concerns. In particular, it turned out that this military-style contact tracing is not as accurate as expected. Apparently, the software overlooks more than 70 percent of all infections.[52] It has also been found that thousands of people were kept in quarantine while they were actually healthy.[53] So, it seems that public measures to fight COVID-19 have been misused to put certain kinds of people illegitimately under house arrest. This is quite worrying. Are we seeing here the emergence of a police state 2.0, 3.0, or 4.0?

Cashless Society

The “cashless society” is another vision of how future societies may be organized. Promoters of this idea cite the fight against corruption, easier taxation, and increased hygiene (it would supposedly reduce the spread of harmful diseases such as COVID-19).

At first, creating a cashless society sounds like a good and comfortable idea, but it is often connected with the concept of providing a “digital ID” to people. Sometimes, it is even proposed “for security reasons” to provide people with an RFID chip in their hand or make their identity machine-readable with a personalized vaccine. This would make people manageable like things, machines, or data – and thereby violate their human dignity. Such proposals are reminiscent of chipping animals or marking inmates with tattoos – and of some of the darkest chapters of human history.

Another technology mentioned in connection with the concept of “cashless society” is blockchain technology. It would serve as a registry of transactions, which could certainly help to fight crime and corruption, if reasonably used.

Depending on how such a cashless society would be managed, one could either have a free market or totalitarian control of consumption. Using powerful algorithms, one could manage purchases in real-time. For example, one could determine who can buy what, and who will get what service. Hence, the system may not be much different from the Citizen Score.

For example, if your CO2 tracing indicated a big climate footprint, your attempted car rental or flight booking may be cancelled. If you were a few days late paying your rent, you might not even be able to open the door of your home (assuming it has an electronic lock).

In times of COVID-19, when many people are in danger of losing their jobs and homes, such a system sounds quite brutal and scary. If we don’t regulate such applications of digital technologies quickly, a data-driven and AI-controlled society with automated enforcement based on algorithmic policing could violate democratic principles and human rights quite dramatically.

Reading and Controlling Minds

If you think what I reported above is already bad enough and it could not get worse, I have to disappoint you. Disruptive technology might even go some steps further. For example, the “U.S. administration’s most prominent science initiative, first unveiled in 2013”[54] aimed at developing new technologies for exploring the brain. The 3-billion-dollar initiative wanted “to deepen understanding of the inner workings of the human mind and to improve how we treat, prevent, and cure disorders of the brain”.[55]

How would it work? In the abstract of a research paper we read:[56] “Nanoscience and nanotechnology are poised to provide a rich toolkit of novel methods to explore brain function by enabling simultaneous measurement and manipulation of activity of thousands or even millions of neurons. We and others refer to this goal as the Brain Activity Mapping Project.”

What are the authors talking about here? My interpretation is that one is considering putting nanoparticles, nanosensors or nanorobots into human cells. This might happen via food, drinks, the air we breathe, or even a special virus. Such nanostructures – so the idea – would allow one to produce a kind of super-EEG. Rather than a few dozen measurement sensors placed on our head, there would be millions of measurement sensors, which – in perspective – would provide a super-high resolution of brain activities. It might, in principle, be possible to see what someone is thinking or dreaming about.

However, one might not only be able to measure and copy brain contents. It could also become possible to stimulate certain brain activity patterns. With the help of machine learning or AI, one may be able to quickly learn how to do this. Then, one could trigger something like dreams or illusions. One could watch TV without a TV set. To make phone calls, one would not need a smartphone anymore. One could communicate through technological telepathy.[57] For this, someone’s brain activities would be read, and someone else’s activities would be stimulated.

I agree, this all sounds pretty much like science fiction. However, some labs are very serious about such research. They actually expect that this or similar kinds of technology may be available soon.[58] Facebook and Google are just two of the companies preparing for this business, but there are many others you have never heard about. Would they soon be able to read and control your mind?

Neurocapitalism

Perhaps you are not interested in using this kind of technology, but you may not be asked. I am not sure how you could avoid exposure to the nanostructures and radiation that would make such applications possible. Therefore, you may not have much influence on how it would feel to live in the data-driven, AI-controlled society of the future. We may not even notice when the technology is turned on and applied to us, because our thinking and feeling might change slowly and our minds would be controlled anyway.

If things happened this way, today’s “surveillance capitalism” would be replaced by “neurocapitalism”.[59] The companies of the future would not only know a lot about your personality, your opinions and feelings, your fears and desires, your weaknesses and strengths, as is the case in today’s “surveillance capitalism”. They would also be able to determine your desires and your consumption.

Some people might argue that such mind control would be absolutely justified to improve the sustainability of this planet and to improve your health, which would be controlled by an industrial-medical complex. Furthermore, police could stop crimes before they happen. You might not even be able to think about a crime. Your thinking would be immediately “corrected” – which brings us back to “thought crimes” and the “Karma Police” program of the British secret service GCHQ.

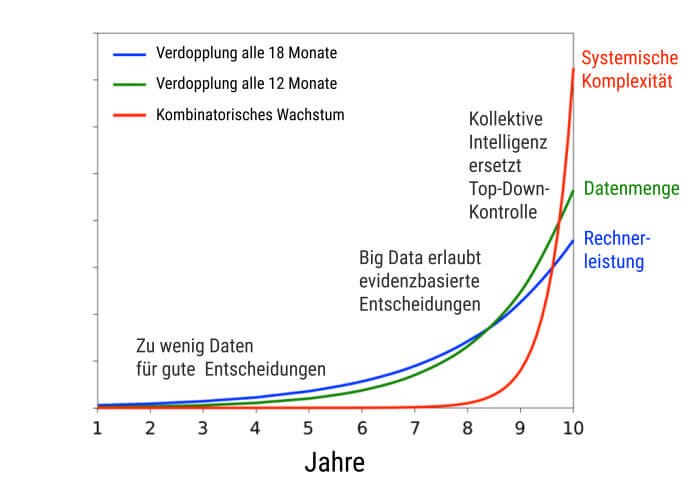

You think this is all fantasy, and it will never happen? Well, according to IBM, human brain indexing will soon consume several billion Petabytes – a data volume so big that it is beyond the imagination of most people. Due to the new business model of brain mapping, the time period over which the amount of data on Earth doubles would soon drop from 12 months to 12 hours.[60] In other words, in half a day, humanity would produce as much data as in the entire history of humanity before.
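The arithmetic behind this claim can be made explicit with a small sketch. It assumes idealized exponential growth with a constant doubling time, which is a simplification and not a statement about how data volumes actually behave; the function names are purely illustrative. Under that assumption, whatever is added during one doubling period equals everything that existed before it, which is exactly the "as much data as in the entire history before" statement.

```python
HOURS_PER_YEAR = 365 * 24


def growth_factor(elapsed_hours: float, doubling_hours: float) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_hours`."""
    return 2.0 ** (elapsed_hours / doubling_hours)


def share_added_in_last_period() -> float:
    """Share of the current total that was added during the latest doubling period."""
    return 1.0 - 1.0 / growth_factor(1.0, 1.0)


# Number of doublings per year for the two doubling times mentioned in the text.
doublings_per_year_at_12_months = HOURS_PER_YEAR / HOURS_PER_YEAR
doublings_per_year_at_12_hours = HOURS_PER_YEAR / 12

print(doublings_per_year_at_12_months)  # 1 doubling per year
print(doublings_per_year_at_12_hours)   # 730 doublings per year
print(share_added_in_last_period())     # half of all data is "new"
```

In this idealized picture, a 12-hour doubling time means 730 doublings per year instead of one, so the yearly growth factor would be 2 to the power of 730 rather than 2.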

A blog on “Integrating Behavioral Health – The Role of Cognitive Computing”[61] elaborates the plans further:

“As population health management gathers momentum, it has become increasingly clear that behavioral health care must be integrated with medical care…

Starting with a “data lake”

To apply cognitive computing to integrated care, the cognitive system must be given multi-sourced data that has been aggregated and normalized so that it can be analyzed. A “data lake”—an advanced type of data warehouse—is capable of ingesting clinical and claims data and mapping it to a normative data model…

A new, unified data model

… The model has predefined structures that include behavioral health. Also included in the model are data on a person’s medical, criminal justice, and socioeconomic histories. The unified data model additionally covers substance abuse and social determinants of health.

Helping to predict the future

Essentially, the end-game is to come up with a model designed for patients that is fine-tuned to recognize evolving patterns of intersecting clinical, behavioral, mental, environmental, and genetic information…

“Ensemble” approach to integrated behavioral health

This “ensemble” approach is already feasible within population health management. But today, it can only be applied on a limited basis to complex cases that include comorbid mental health and chronic conditions…”

You may like it or not: it seems that companies are already working on digital ways to correct behaviors of entire populations. They might even be willing to break your will, if this appears to be justified for a “higher purpose”. Since the discussion about strict COVID-19 countermeasures, we all know that they would certainly find excuses for this…

Human Machine Convergence

By now you may agree that many experts in Silicon Valley and elsewhere seem to see humans as programmable, biological robots. Moreover, from their perspective, robots would be “better than us”, as soon as super(-human) intelligence exists. Expectations of when this will happen range from “fifty years from now” to “superintelligence is already here”.

These experts argue that robots never get tired and never get ill. They don’t demand a salary, social insurance, or holidays. They have superior knowledge and information processing capacity. They would decide in unemotional, rational ways. They would behave exactly as the owner wants them to behave – like slaves in Babylonian or Egyptian times.

Furthermore, transhumanists expect that humans who can afford it would technologically upgrade themselves as much as they can or want. If you didn’t hear well enough, you would buy an audio implant. If you didn’t see well enough, you would buy a visual implant. If you didn’t think fast enough, you would connect your brain with a supercomputer. If your physical abilities were not good enough, you might buy robot arms or legs. Your biological body parts would be replaced by technology step by step. Eventually, humans and robots would become indistinguishable.[62] Your body would become more powerful and have new senses and additional features – that is the idea. The ultimate goal would be immortality and omnipotence[63] – and the creation of a new kind of human.

Unfortunately, experience tells us that, whenever someone tried to create a new kind of human(ity), millions of people were killed. It is shocking that, even though similar developments seem to be under way again, the responsible political and legal institutions have not taken proper steps to protect us from the possible threat that is coming our way.

Algorithm-Based Dying and Killing

It is unclear how the transhumanist dream[64] would be compatible with human rights, sustainability and world peace. According to the “3 laws of transhumanism,” everyone would want to get as powerful as possible. The world’s resources would not support this and, hence, the system wouldn’t be fair. For some people to live longer, others would have to die early. The resulting principle would be “The survival of the richest”.[65]

Many rich people seem to like this idea, even though ordinary people would have to pay for such life extensions with their lives (i.e. with shorter life spans). Most likely, life-and-death decisions, like everything else, would be taken by IT systems. Some companies have already worked on such algorithms.[66] In fact, medical treatments increasingly depend on decisions of intelligent machines, which consider whether a medical treatment or operation is “a good investment” or not. Old people and people with “bad genes” would probably pay the price.

It seems that even some military people support this way of thinking. In an unsustainable and “over-populated” world, death rates on our planet will skyrocket in this century, at least according to the Club of Rome’s “Limits to Growth” study.[67] Of course, one would not want a World War III to “fix the over-population problem”. One would also not want people to kill each other on the street for a loaf of bread. So, military people and think tanks have been thinking about other solutions, it seems…

When it comes to life-and-death decisions, these people often insist that one must choose the lesser of two evils and refer to the “trolley problem”. In this ethical dilemma, a trolley is assumed to run over a group of, say, 5 railroad workers, if you don’t pull the switch. If you do so, however, it is assumed that one other person will die, say, a child playing on the railway tracks. So, what would you do? Or what should one do?

Autonomous vehicles may sometimes have to take such difficult decisions, too. Their cameras and sensors may be able to distinguish different kinds of people (e.g. a mother vs. a child), or they may even recognize the person (e.g. a manager vs. an unemployed person). How should the system decide? Should it use a Citizen Score, which summarizes someone’s “worth for society,” perhaps considering wealth, health, and behavior?

Now, assume politicians eventually came up with a law determining how algorithms of autonomous systems (such as self-driving cars) should take life-and-death decisions in order to save valuable lives. Furthermore, assume that, later on, the world ran into a sustainability crisis, and there were not enough resources for everyone. Then, the algorithms originally created to save valuable lives would turn into killer algorithms, which would “sort out” people who are “too much” – potentially thousands or millions of people. Such “triage” arguments have, in fact, been made recently, for example in the early phase of the COVID-19 response.[68]

Such military-style response strategies[69] have apparently been developed for times of “unpeace”, as some people call it. However, they would imply the targeted killing of unconsenting civilians, which is forbidden even in wartime.[70] Such a “military solution” would come pretty close to concepts known as “eugenics” and “euthanasia,”[71] which are reminiscent of some of the darkest chapters of human history. For sure, it would not be suited as the basis of a civil-ization (which has the word “civil” in it for a reason).

In conclusion, algorithm-based life-and-death decisions are not an acceptable “solution to over-population”. If this were the only way to stabilize a socio-economic system, the system itself would obviously have to be changed. Even if people were killed not by drones or killer robots but painlessly, I doubt that algorithmically based death could ever be called ethical or moral. It is unlikely that fellow humans or later generations would ever forgive those who set such a system in motion. In fact, in the meantime, scientists, philosophers, and ethics committees increasingly speak up against the use of a Citizen Score in connection with life-and-death decisions, whatever personal data it may be based on; most people like to be treated equally and in a fair way.[72]

Technological Totalitarianism and Digital Fascism

Summarizing the previous sections about worrying digital developments, I conclude that the greatest opportunity in a century may easily turn into the greatest crime against humanity, if we don’t take proper precautions. Of course, I don’t deny the many positive potentials of the digital revolution. However, presently, it appears we are in acute danger of heading towards a terrible nightmare called “technological totalitarianism”, which seems to be a mix of fascism, feudalism and communism, digitally reinvented. This may encompass some or all of the following elements:

- mass surveillance,

- profiling and targeting,

- unethical experiments with humans,

- censorship and propaganda,

- mind control or behavioral manipulation,

- social engineering,

- forced conformity,

- digital policing,

- centralized control,

- different valuation of people,

- messing with human rights,

- humiliation of minorities,

- digitally based eugenics and/or euthanasia.

This technological totalitarianism has been hiding well behind the promises and opportunities of surveillance capitalism, behind the “war on terror”, behind the need for “cybersecurity”, and behind the call “to save the world” (e.g. to measure, tax, and reduce the CO2 consumption on an individual level). Sadly, politicians, judges, journalists and others have so far allowed these developments to happen.

These developments are no coincidence, however. They started shortly after World War II, when Nazi elites had to leave Germany. Through “Operation Paperclip” and similar operations,[73] they were transferred to the United States, Russia and other countries around the world. There, they have often worked in secret services and secret research programs. This is how Nazi thinking has spread around the world, particularly in countries that aimed at power. By now, the very core of human dignity and the foundations of many societies are at stake. Perhaps the world has never seen a greater threat before.

Singularity and Digital God

Some people think what we are witnessing now is an inevitable, technology-driven development. For example, it comes under the slogan “The singularity is near”.[74] According to this, we will soon see superintelligence, i.e. an Artificial Intelligence system with super-human intelligence and capability. This system is imagined to learn and gain intelligence at an accelerating pace, and so, it would eventually know everything better than humans.

Shouldn’t one then demand that humans do what this kind of all-knowing superintelligence demands from them, as if it were a “digital God”?[75] Wouldn’t everything else be irrational and a “crime against humanity and nature”, given the existential challenges our planet is faced with? Wouldn’t we become something like “cells” of a new super-organism called “humanity”, managed by a superintelligent brain? It seems some people cannot imagine anything else.

In the very spirit of transhumanism, they would happily engage in building such a super-brain – a kind of “digital God” that is as omniscient, omnipresent, and omnipotent as possible with today’s technology. This “digital God” would be something like a super-Google that would know our wishes and could manipulate our thinking, feeling, and behavior. Once AI can trigger specific brain activities, it could even give us the feeling that we have met God. The fake (digital) God could create fake spiritual experiences. It could make us believe we have met the God that world religions tell us about – finally it was here, and it was taking care of our lives…

Do you think we could possibly stop disruptive innovators from working on the implementation of this idea? After digitally reinventing products and services, administrative and decision processes, legal procedures and law enforcement, money and business, would they stay away from reinventing religion and from creating an artificial God? Probably not.[76]

Indeed, former Google engineer Anthony Levandowski has already established a new religion, which believes in Artificial Intelligence as God.[77] Even before, there was a “Reformed Church of Google”. The related web page[78] contains various proofs of “Google Is God,” “Prayers,” and “Commandments”. Of course, many people would not take such a thing seriously. Nevertheless, some powerful people may be very serious about establishing AI as a new God and making us obey its “commandments”,[79] in the name of “fixing the world”.

This does not mean, of course, that this idea would have to become reality. However, if you asked me whether and when such a system would be built, I would answer: “It probably exists already, and it might hide behind a global cybersecurity center, which collects all the data needed for such a system.” It may be just a matter of time and opportunity to turn on the full functionality of the Artificial Intelligence system that knows us all, and to give it more or less absolute powers. It seems that, with the creation of a man-made, artificial, technological God, the ultimate Promethean dream would come true. After reading the next section, you might even wonder whether it is perhaps the ultimate Luciferian dream…

Apocalyptic AI

I would not be surprised if you found the title of this section far-fetched. However, the phrase “apocalyptic AI” is not my own invention – it is the title of an academic book[80] summarizing the thinking of a number of AI pioneers. The introduction of this book says it all:

“Apocalyptic AI authors promise that intelligent machines – our “mind children,” according to Moravec – will create a paradise for humanity in the short term but, in the long term, human beings will need to upload their minds into machine bodies in order to remain a viable life-form. The world of the future will be a transcendent digital world; mere human beings will not fit in. In order to join our mind children in life everlasting, we will upload our conscious minds into robots and computers, which will provide us with the limitless computational power and effective immortality that Apocalyptic AI advocates believe make robot life better than human life.

I am not interested in evaluating the moral worth of Apocalyptic AI…”

Here, we notice a number of surprising points: “apocalyptic AI” is seen as a positive thing, as technology is imagined to make humans immortal by uploading our minds into a digital platform. This is apparently expected to be the final stage of human-machine convergence and the end goal of transhumanism. However, humans as we know them today would be extinct.[81] This reveals transhumanism as a misanthropic technology-based ideology and, furthermore, a highly dangerous, “apocalyptic” end-times cult.

Tragically, this new technology-based religion has been promoted by high-level politics, for example, the Obama administration.[82] To my surprise, the first time I encountered “apocalyptic AI” was at an event in Berlin on October 28, 2018,[83] which was apparently supported by government funds. The “ÖFIT 2018 Symposium” on “Artificial Intelligence as a Way to Create Order” [in German: “Künstliche Intelligenz als Ordnungsstifterin”] took place at “Silent Green”, a former crematorium. Honestly, I was shocked and thought the place was better suited to warning us of a possible “digital holocaust” than to making us believe in a digital God.

However, those who believe in “apocalyptic AI”, among them leading AI experts, seem to think that it would be able to bring us the “transcendent eternal existence” and the “golden age of peace and prosperity” promised in the biblical Apocalypse. At Amazon, for example, the book is advertised with the words:[84]

“Apocalyptic AI, the hope that we might one day upload our minds into machines or cyberspace and live forever, is a surprisingly wide-spread and influential idea, affecting everything from the world view of online gamers to government research funding and philosophical thought. In Apocalyptic AI, Robert Geraci offers the first serious account of this „cyber-theology“ and the people who promote it.

Drawing on interviews with roboticists and AI researchers and with devotees of the online game Second Life, among others, Geraci illuminates the ideas of such advocates of Apocalyptic AI as Hans Moravec and Ray Kurzweil. He reveals that the rhetoric of Apocalyptic AI is strikingly similar to that of the apocalyptic traditions of Judaism and Christianity. In both systems, the believer is trapped in a dualistic universe and expects a resolution in which he or she will be translated to a transcendent new world and live forever in a glorified new body. Equally important, Geraci shows how this worldview shapes our culture. Apocalyptic AI has become a powerful force in modern culture. In this superb volume, he shines a light on this belief system, revealing what it is and how it is changing society.”

I also recommend reading the book review there by “sqee”, posted on December 16, 2010. It summarizes the apocalyptic elements of the Judaic/Christian theology, which some transhumanists are now trying to engineer, including:

“A. A belief that there will be an irreversible event on a massive scale (global) after which nothing will ever be the same (in traditional apocalypses, the apocalypse itself; in Apocalyptic AI ideology, an event known as „the singularity“)

B. A belief that after the apocalypse/singularity, rewards will be granted to followers/adherents/believers that completely transform the experience of life as we know it” (while the others are apparently doomed).

Personally, I don’t believe in this vision. I rather consider the above an “apocalyptic” worst-case scenario that may happen if we don’t manage to avert attempts to steer human behaviors and minds and to submit humanity to a (digital) control system. It is clear that such a system would not establish the golden age of peace and prosperity, but would be an “evil” totalitarian system that would challenge humanity altogether. Even though some tech companies and visionaries seem to favor such developments, we should stay away from them – in agreement with democratic constitutions and the United Nations’ Universal Declaration of Human Rights.

It is high time to challenge the “technology-driven” approach. Technology should serve humans, not the other way round. In fact, as I have illustrated in other blog posts, human-machine convergence is not the only possible future scenario. I would say there are indeed much better ways of using digital technologies than what we saw above.

REFERENCES

[1] See the Gartner Hype Cycle, https://en.wikipedia.org/wiki/Hype_cycle

[2] S. Zuboff (2019) The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power (PublicAffairs).

[3] See https://en.wikipedia.org/wiki/Edward_Snowden, https://www.theguardian.com/us-news/the-nsa-files, https://www.theguardian.com/world/interactive/2013/nov/01/snowden-nsa-files-surveillance-revelations-decoded

[4] Finding your voice, The Intercept (January 19, 2018) https://theintercept.com/2018/01/19/voice-recognition-technology-nsa/

[5] See https://en.wikipedia.org/wiki/DARPA_LifeLog (accessed August 4, 2020); https://www.vice.com/en_us/article/vbqdb8/15-years-ago-the-military-tried-to-record-whole-human-lives-it-ended-badly

[6] See https://en.wikipedia.org/wiki/Vault_7, https://wikileaks.org/ciav7p1/, https://wikileaks.org/vault7/

[7] See https://www.bbc.com/news/world-europe-51487856, https://www.theguardian.com/us-news/2020/feb/11/crypto-ag-cia-bnd-germany-intelligence-report

[8] Can the Military Make A Prediction Machine?, Defense One (April 8, 2015) https://www.defenseone.com/technology/2015/04/can-military-make-prediction-machine/109561/

[9] Exclusive: Google, CIA invest in ‘Future’ of Web Monitoring, Wired (July 28, 2010) https://www.wired.com/2010/07/exclusive-google-cia/

[10] Palantir knows everything about you, Bloomberg (April 18, 2020) https://www.bloomberg.com/features/2018-palantir-peter-thiel/

[11] See https://en.wikipedia.org/wiki/Profiling_(information_science)

[12] See https://en.wikipedia.org/wiki/Digital_twin

[13] See also F. Pasquale, The Black Box Society: The Secret Algorithms That Control Money and Information (Harvard University Press, 2016)

[14] See https://en.wikipedia.org/wiki/Cognitive_computing, https://www.forbes.com/sites/bernardmarr/2016/03/23/what-everyone-should-know-about-cognitive-computing/

[15] See https://www.crystalknows.com

[16] See https://en.wikipedia.org/wiki/Synthetic_Environment_for_Analysis_and_Simulations, https://emerj.com/ai-future-outlook/nsa-surveillance-and-sentient-world-simulation-exploiting-privacy-to-predict-the-future/, https://cointelegraph.com/news/us-govt-develops-a-matrix-like-world-simulating-the-virtual-you

[17] Sentient world: war games on the grandest scale, The Register (June 23, 2007) https://www.theregister.com/2007/06/23/sentient_worlds/

[18] Brexit – How the British People Were Hacked, Global Research (November 23, 2017) https://www.globalresearch.ca/brexit-how-the-british-people-were-hacked/5619574, Brexit – a Game of Social Engineering with No Winners, Medium (June 4, 2019) https://medium.com/@viktortachev/brexit-a-game-of-social-engineering-and-no-winners-f817529506f4; see also the books by Cambridge Analytica Insiders Christopher Wylie, https://www.amazon.com/Mindf-Inside-Cambridge-Analyticas-Break/dp/1788164997/, and Brittany Kaiser, https://www.amazon.com/Targeted-Cambridge-Analytica-Whistleblowers-Democracy/dp/0062965794/

[19] D. Helbing and E. Pournaras, Build Digital Democracy, Nature 527, 33-34 (2015) https://www.nature.com/news/society-build-digital-democracy-1.18690; D. Helbing, Why We Need Democracy 2.0 and Capitalism 2.0 to Survive (2016) https://www.researchgate.net/publication/303684254

[20] D. Kahneman (2013) Thinking Fast and Slow (Farrar, Straus, and Giroux), https://www.amazon.com/Thinking-Fast-Slow-Daniel-Kahneman/dp/0374533555/

[21] See https://en.wikipedia.org/wiki/Asch_conformity_experiments, https://www.youtube.com/watch?v=TYIh4MkcfJA

[22] E. Pariser, The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think (Penguin, 2012) https://www.amazon.com/Filter-Bubble-Personalized-Changing-Think/dp/0143121235/

[23] See https://de.wikipedia.org/wiki/Im_Schatten_der_Netzwelt, http://www.thecleaners-film.de

[24] E. Bernays, Propaganda (Ig, 2004) https://www.amazon.com/Propaganda-Edward-Bernays/dp/0970312598/

[25] See https://en.wikipedia.org/wiki/Social_bot

[26] The Rise of the Robot Reporter, The New York Times (February 5, 2019) https://www.nytimes.com/2019/02/05/business/media/artificial-intelligence-journalism-robots.html, https://emerj.com/ai-sector-overviews/automated-journalism-applications/

[27] New AI fake text generator may be too dangerous to release, say creators, The Guardian (February 14, 2019) https://www.theguardian.com/technology/2019/feb/14/elon-musk-backed-ai-writes-convincing-news-fiction

[28] See https://en.wikipedia.org/wiki/Post-truth_politics

[29] https://news.artnet.com/art-world/mark-zuckerberg-deepfake-artist-1571788

https://vimeo.com/341794473

[30] Adobe’s Project VoCo Lets You Edit Speech As Easily As Text, TechCrunch (November 3, 2016) https://techcrunch.com/2016/11/03/adobes-project-voco-lets-you-edit-speech-as-easily-as-text/, https://www.youtube.com/watch?v=I3l4XLZ59iw

[31] Face2Face: Real-Time Face Capture and Reenactment of Videos, Cinema5D (April 9, 2016) https://www.cinema5d.com/face2face-real-time-face-capture-and-reenactment-of-videos/

[32] How Covert Agents Infiltrate the Internet …, The Intercept (February 25, 2014) https://theintercept.com/2014/02/24/jtrig-manipulation/, Sentient world: war games on the grandest scale, The Register (June 23, 2007) https://www.theregister.com/2007/06/23/sentient_worlds/

[33] https://en.wikipedia.org/wiki/Smith–Mundt_Act

https://en.wikipedia.org/wiki/Countering_Foreign_Propaganda_and_Disinformation_Act

[34] An Internet Giveaway to the U.N., Wall Street Journal (August 28, 2016) https://www.wsj.com/articles/an-internet-giveaway-to-the-u-n-1472421165

[35] See https://en.wikipedia.org/wiki/Subliminal_stimuli

[36] See https://en.wikipedia.org/wiki/Nudge_theory; R.H. Thaler and C.R. Sunstein, Nudge (Yale University Press, 2008) https://www.amazon.com/Nudge-Improving-Decisions-Health-Happiness/dp/0300122233/

[37] Tech Billionaires Convinced We Live in The Matrix Are Secretly Funding Scientists to Help Break Us Out of It, Independent (October 6, 2016) https://www.independent.co.uk/life-style/gadgets-and-tech/news/computer-simulation-world-matrix-scientists-elon-musk-artificial-intelligence-ai-a7347526.html

[38] Joint Threat Research Intelligence Group, https://en.wikipedia.org/wiki/Joint_Threat_Research_Intelligence_Group; Controversial GCHQ Unit Engaged in Domestic Law Enforcement, Online Propaganda, Psychological Research, The Intercept (June 22, 2015) https://theintercept.com/2015/06/22/controversial-gchq-unit-domestic-law-enforcement-propaganda/

[39] See https://en.wikipedia.org/wiki/List_of_cognitive_biases

[40] Tristan Harris, How a handful of tech companies controls billions of minds every day, https://www.youtube.com/watch?v=C74amJRp730 (July 28, 2017)

[41] Fresh Cambridge Analytica leak ‘shows global manipulation is out of control’, The Guardian (January 4, 2020) https://www.theguardian.com/uk-news/2020/jan/04/cambridge-analytica-data-leak-global-election-manipulation

[42] Before Trump, Cambridge Analytica quietly built “psyops” for militaries, FastCompany (September 25, 2019) https://www.fastcompany.com/90235437/before-trump-cambridge-analytica-parent-built-weapons-for-war; Meet the weaponized propaganda AI that knows you better than you know yourself, ExtremeTech (March 1, 2017) https://www.extremetech.com/extreme/245014-meet-sneaky-facebook-powered-propaganda-ai-might-just-know-better-know; The Rise of the Weaponized AI Propaganda Machine, Medium (February 13, 2017) https://www.fastcompany.com/90235437/before-trump-cambridge-analytica-parent-built-weapons-for-war

[43] ACLU: Orwellian Citizen Score, China‘s credit score system, is a warning for Americans, Computerworld (October 7, 2015) https://www.computerworld.com/article/2990203/aclu-orwellian-citizen-score-chinas-credit-score-system-is-a-warning-for-americans.html

[44] See https://en.wikipedia.org/wiki/Social_Credit_System

[45] How China Is Using „Social Credit Scores“ to Reward and Punish Its Citizens, Time (2019) https://time.com/collection/davos-2019/5502592/china-social-credit-score/

[46] See https://en.wikipedia.org/wiki/Customer_lifetime_value

[47] Deutscher Ethikrat: „Der Staat darf menschliches Leben nicht bewerten“, ZEIT (March 27, 2020) https://www.zeit.de/gesellschaft/zeitgeschehen/2020-03/deutscher-ethikrat-coronavirus-behandlungsreihenfolge-infizierte

[48] British ‘Karma Police’ program carries out mass surveillance of the web, The Verge (September 25, 2015) https://www.theverge.com/2015/9/25/9397119/gchq-karma-police-web-surveillance

[49] Profiled: From Radio to Porn, British Spies Track Web Users’ Online Identities, The Intercept (September 25, 2015) https://www.theverge.com/2015/9/25/9397119/gchq-karma-police-web-surveillance

[50] L. Lessig, Code is Law: On Liberty in Cyberspace, Harvard Magazine (January 1, 2000) https://harvardmagazine.com/2000/01/code-is-law-html, https://en.wikipedia.org/wiki/Code_and_Other_Laws_of_Cyberspace

[51] Überwachung von Flugpassagieren liefert Fehler über Fehler, Süddeutsche Zeitung (April 24, 2019) https://www.sueddeutsche.de/digital/fluggastdaten-bka-falschtreffer-1.4419760; 100,000 false positives for every real terrorist: Why anti-terror algorithms don’t work, First Monday (2017) https://firstmonday.org/ojs/index.php/fm/article/download/7126/6522

[52] Zweite Welle im Vorzeigeland – was wir von Israel lernen können, WELT (July 8, 2020) https://www.welt.de/politik/ausland/article211232079/Israel-Warum-im-Vorzeigeland-jetzt-wieder-ein-Lockdown-droht.html

[53] 12,000 Israelis mistakenly quarantined by Shin Bet’s tracking system, The Jerusalem Post (July 15, 2020) https://www.jpost.com/cybertech/12000-israelis-mistakenly-quarantined-by-shin-bets-tracking-system-635154

[54] Rewriting Life: Obama’s Brain Project Backs Neurotechnology, MIT Technology Review (September 30, 2014), https://www.technologyreview.com/2014/09/30/171099/obamas-brain-project-backs-neurotechnology/

[55] The BRAIN Initiative Mission, https://www.braininitiative.org/mission/ (accessed on July 31, 2020).

[56] A.P. Alivisatos et al. (2013) Nanotools for Neuroscience and Brain Activity Mapping, ACS Nano 7 (3), 1850-1866, https://pubs.acs.org/doi/10.1021/nn4012847

[57] Is Tech-Boosted Telepathy on Its Way? Forbes (December 4, 2018) https://www.forbes.com/sites/forbestechcouncil/2018/12/04/is-tech-boosted-telepathy-on-its-way-nine-tech-experts-weigh-in/

[58] https://www.cnbc.com/2017/07/07/this-inventor-is-developing-technology-that-could-enable-telepathy.html

https://www.technologyreview.com/2017/04/22/242999/with-neuralink-elon-musk-promises-human-to-human-telepathy-dont-believe-it/

[59] Brain-reading tech is coming. The law is not ready to protect us. Vox (December 20, 2019), https://www.vox.com/2019/8/30/20835137/facebook-zuckerberg-elon-musk-brain-mind-reading-neuroethics (accessed on July 31, 2020); What Is Neurocapitalism and Why Are We Living In It?, Vice (October 18, 2016), https://www.vice.com/en_us/article/qkjxaq/what-is-neurocapitalism-and-why-are-we-living-in-it (accessed on July 31, 2020); M. Meckel (2018) Mein Kopf gehört mir: Eine Reise durch die schöne neue Welt des Brainhacking, Piper, https://www.amazon.com/Mein-Kopf-gehört-mir/dp/3492059074/

[60] Knowledge Doubling Every 12 Months, Soon to be Every 12 Hours (April 19, 2013) https://www.industrytap.com/knowledge-doubling-every-12-months-soon-to-be-every-12-hours/3950 (accessed July 31, 2020), refers to http://www-935.ibm.com/services/no/cio/leverage/levinfo_wp_gts_thetoxic.pdf

[61] https://www.ibm.com/blogs/watson-health/integrating-behavioral-health-role-cognitive-computing/ (accessed September 5, 2018)

[62] “In Zukunft werden wir Mensch und Maschine wohl nicht mehr unterscheiden können“, Neue Zürcher Zeitung (August 22, 2019), https://www.nzz.ch/zuerich/mensch-oder-maschine-interview-mit-neuropsychologe-lutz-jaencke-ld.1502927 (accessed on July 31, 2020).

[63] According to the “Teleological Egocentric Functionalism”, as expressed by the “3 laws of transhumanism” (see Zoltan Istvan’s “The Transhumanist Wager”, https://en.wikipedia.org/wiki/The_Transhumanist_Wager, accessed on July 31, 2020):

1) A transhumanist must safeguard one’s own existence above all else.

2) A transhumanist must strive to achieve omnipotence as expediently as possible–so long as one’s actions do not conflict with the First Law.

3) A transhumanist must safeguard value in the universe–so long as one’s actions do not conflict with the First and Second Laws.

[64] It’s Official, the Transhuman Era Has Begun, Forbes (August 22, 2018), https://www.forbes.com/sites/johnnosta/2018/08/22/its-official-the-transhuman-era-has-begun/ (accessed on July 31, 2020)

[65] D. Rushkoff, Future Human: Survival of the Richest, OneZero (July 5, 2018) https://onezero.medium.com/survival-of-the-richest-9ef6cddd0cc1

[66] Big-Data-Algorithmen: Wenn Software über Leben und Tod entscheidet, ZDF heute (December 20, 2017), https://web.archive.org/web/20171222135350/https://www.zdf.de/nachrichten/heute/software-soll-ueber-leben-und-tod-entscheiden-100.html

Was Sie wissen müssen, wenn Dr. Big-Data bald über Leben und Tod entscheidet: „Wir sollten Maschinen nicht blind vertrauen“, Medscape (March 7, 2018) https://deutsch.medscape.com/artikelansicht/4906802

[67] D.H. Meadows, Limits to Growth (Signet, 1972) https://www.amazon.com/Limits-Growth-Donella-H-Meadows/dp/0451057678/; D.H. Meadows, J. Randers, and D.L. Meadows, Limits to Growth: The 30-Year Update (Chelsea Green, 2004).

[68] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6642460/

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6642460/pdf/JMEHM-12-3.pdf

https://www.tagesspiegel.de/wissen/die-grausamkeit-der-triage-der-moment-wenn-corona-aerzte-ueber-den-tod-entscheiden/25650534.html

https://www.vox.com/coronavirus-covid19/2020/3/31/21199721/coronavirus-covid-19-hospitals-triage-rationing-italy-new-york

https://scholarship.law.columbia.edu/cgi/viewcontent.cgi?article=3022&context=faculty_scholarship

https://www.abc.net.au/religion/covid19-and-the-trolley-problem/12312370

https://www.tandfonline.com/doi/full/10.1080/17470919.2015.1023400

[69] R. Arkin (2017) Governing Lethal Behavior in Autonomous Robots (Chapman and Hall/CRC) https://www.amazon.com/Governing-Lethal-Behavior-Autonomous-Robots-dp-1138435821/; R. Sparrow, Can Machines Be People? Reflections on the Turing Triage Test, https://researchmgt.monash.edu/ws/portalfiles/portal/252781337/2643143_oa.pdf

[70] Ethisch sterben lassen – ein moralisches Dilemma, Neue Zürcher Zeitung (March 23, 2020), https://www.nzz.ch/meinung/ethisch-sterben-die-gefahr-der-moralischen-entgleisung-ld.1542682

[71] F. Hamburg (2005) Een Computermodel Voor Het Ondersteunen van Euthanasiebeslissingen (Maklu) https://books.google.de/books?id=eXqX0Ls4wGQC

[72] J. Nagler, J. van den Hoven, and D. Helbing (August 21, 2017) An Extension of Asimov’s Robotics Laws, https://www.researchgate.net/publication/319205931_An_Extension_of_Asimov%27s_Robotics_Laws;

B. Dewitt, B. Fischhoff, and N.-E. Sahlin, ‘Moral machine’ experiment is no basis for policymaking, Nature 567, 31 (2019) https://www.nature.com/articles/d41586-019-00766-x;

Y.E. Bigman and K. Gray, Life and death decisions of autonomous vehicles, Nature 579, E1-E2 (2020)

https://www.nature.com/articles/s41586-020-1987-4;

Automatisiertes und vernetztes Fahren, Bericht der Ethikkommission, Bundesministerium für Verkehr und digitale Infrastruktur (June 2017)

https://www.bmvi.de/SharedDocs/DE/Publikationen/DG/bericht-der-ethik-kommission.pdf, https://www.researchgate.net/publication/318340461;

COVID-19 pandemic: triage for intensive-care treatment under resource scarcity, Swiss Medical Weekly (March 24, 2020) https://smw.ch/article/doi/smw.2020.20229;

Deutscher Ethikrat: “Der Staat darf menschliches Leben nicht bewerten”, ZEIT (March 27, 2020) https://www.zeit.de/gesellschaft/zeitgeschehen/2020-03/deutscher-ethikrat-coronavirus-behandlungsreihenfolge-infizierte, https://www.ethikrat.org/fileadmin/Publikationen/Ad-hoc-Empfehlungen/deutsch/ad-hoc-empfehlung-corona-krise.pdf

[73] See https://en.wikipedia.org/wiki/Operation_Paperclip and https://www.cia.gov/library/center-for-the-study-of-intelligence/csi-publications/csi-studies/studies/vol-58-no-3/operation-paperclip-the-secret-intelligence-program-to-bring-nazi-scientists-to-america.html

[74] R. Kurzweil, The Singularity Is Near (Penguin, 2007) https://www.amazon.com/Singularity-Near-Publisher-Penguin-Non-Classics/dp/B004WSS8FU/

[75] Of course not. If the underlying goal function of the system were changed only slightly (or if the applied dataset were updated), this might imply completely different command(ment)s… In scientific terms, this problem is known as “sensitivity”.

[76] W. Indick (2015) The Digital God: How Technology Will Reshape Spirituality (McFarland), https://www.amazon.com/Digital-God-Technology-Reshape-Spirituality/dp/0786498927

[77] Inside the First Church of Artificial Intelligence, Wired (November 15, 2017) https://www.wired.com/story/anthony-levandowski-artificial-intelligence-religion/

[78] https://churchofgoogle.org (accessed on July 31, 2020)

[79] An AI god will emerge by 2042 and write its own bible. Will you worship it? VentureBeat (October 2, 2017) https://venturebeat.com/2017/10/02/an-ai-god-will-emerge-by-2042-and-write-its-own-bible-will-you-worship-it/

[80] R.M. Geraci (2010) Apocalyptic AI: Visions of Heaven in Robotics, Artificial Intelligence, and Virtual Reality (Oxford University Press), https://www.amazon.de/Apocalyptic-AI-Robotics-Artificial-Intelligence/dp/0195393023

[81] Interview zu künstlicher Intelligenz: “Der Nebeneffekt wäre, dass die Menschheit dabei ausgerottet würde“, watson (May 11, 2015), https://www.watson.ch/digital/wissen/533419807-kuenstliche-intelligenz-immenses-potential-und-noch-groesseres-risiko

[82] The Age of Transhumanist Politics Has Begun, TELEPOLIS (April 12, 2015), https://www.heise.de/tp/features/The-Age-of-Transhumanist-Politics-Has-Begun-3371228.html (accessed on July 31, 2020)

[83] #ÖFIT2018 – Keynote Prof. Robert Geraci PhD, https://www.youtube.com/watch?v=bD1haPjC6kY

[84] https://www.amazon.com/Apocalyptic-AI-Robotics-Artificial-Intelligence/dp/0199964009 (accessed on July 31, 2020)

Posted by Dirk Helbing at 11:52

Dirk Helbing about the struggle for our future

Friday, 10 July 2020

The Corona Crisis Reveals the Struggle for Our Future

by Dirk Helbing

For a long time, experts have warned of the consequences of a non-sustainable world, but few have understood the implications. Today’s economic system would enter a terminal phase before a new kind of system would rise from the ashes. For sure, the digital revolution allowed the machinery of utility maximization to reach new heights. Today’s surveillance capitalism sells our digital doubles, in other words, detailed digital copies of our lives. It became increasingly clear that we would be next. People were already talking about the value of life, which, from a market point of view, could be pretty low, considering overpopulation and the coming wave of robotic automation. I have warned that some have worked on systems that would autonomously decide over the life and death of people, based on a citizen score reflecting what their “systemic value” was claimed to be.

Then came the coronavirus. Even though the world had been warned in advance of the next great pandemic, COVID-19 caught the world surprisingly unprepared. Even though it started to spread in early December 2019, there was still a shortage not only of respirators but also of disinfectants and face masks as late as April 2020. And so, many people died an early death. Some doctors made triage decisions as in wartime, and old or seriously ill people did not stand a good chance of being helped. Some doctors relied on “terminal care”: basically, they gave opiates and sleeping pills to patients they could not save, and put them to death.

Das kalte Paradies (The Cold Paradise)

Dirk Helbing, Professor of Computational Social Science at ETH Zurich, talks about the era of digitalization and the challenge of using its possibilities for the benefit of civil society.

Dirk Helbing interviewed by Manuela Lenzen, February 2020

https://www.wiko-berlin.de/wikothek/koepfe-und-ideen/issue/15/das-kalte-paradies/

The Automation of Society is Next: How to Survive the Digital Revolution by Dirk Helbing :: SSRN

The explosion in data volumes, processing power, and Artificial Intelligence, known as the “digital revolution”, has driven our world to a dangerous p


Digitale Demokratie statt Datendiktatur (Digital Democracy Instead of Data Dictatorship)

Big data, nudging, behavioral control: are we threatened by the automation of society through algorithms and artificial intelligence? A joint appeal to safeguard freedom and democracy.

Everything is becoming intelligent: soon we will have not only smartphones but also smart homes, smart factories, and smart cities. Will this development end with smart nations and a smart planet?


Indeed, the field of artificial intelligence is making breathtaking progress. In particular, it contributes to the automation of big data analysis. Artificial intelligence is no longer programmed line by line but is now capable of learning and continues to develop on its own. Recently, for example, Google’s DeepMind algorithms autonomously learned to win 49 Atari games. Algorithms can now recognize handwriting, speech, and patterns almost as well as humans and can even solve many tasks better. They are beginning to describe the content of photos and videos. Already, 70 percent of all financial transactions are controlled by algorithms, and digital news is in part generated automatically. All this has radical economic consequences: in the coming 10 to 20 years, algorithms will probably displace half of today’s jobs. 40 percent of the top 500 companies will have disappeared within a decade.

It is foreseeable that supercomputers will soon surpass human capabilities in almost all areas, sometime between 2020 and 2060. This is now prompting alarmed voices. Technology visionaries such as Elon Musk of Tesla Motors, Bill Gates of Microsoft, and Apple co-founder Steve Wozniak warn of superintelligence as a serious danger to humanity, perhaps more threatening than atomic bombs. Is that alarmism?

The Greatest Historical Upheaval in Decades

One thing is certain: the way we organize the economy and society will change fundamentally. We are currently experiencing the greatest historical upheaval since the end of the Second World War: the automation of production and the invention of self-driving vehicles are now being followed by the automation of society. Humanity thus stands at a crossroads where great opportunities are emerging, but also considerable risks. If we make the wrong decisions now, this could threaten our greatest societal achievements.

In the 1940s, the American mathematician Norbert Wiener (1894-1964) founded cybernetics. According to him, the behavior of systems can be controlled by means of suitable feedback. Early on, some researchers envisioned controlling the economy and society according to these principles, but for a long time the necessary technology was lacking.
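Wiener’s idea of steering a system through feedback can be illustrated with a minimal sketch. The following Python example is a toy under stated assumptions, not anything taken from Wiener’s work or from the systems discussed here: the function name `simulate_feedback`, the `gain` parameter, and all numbers are hypothetical. It shows a simple proportional feedback loop in which the deviation from a target value is measured at each step and a correction proportional to that deviation is applied.

```python
def simulate_feedback(target, state, gain, steps):
    """Toy proportional feedback loop (illustrative assumption, not a real system).

    At every step the deviation from the target is measured and a
    fraction `gain` of that deviation is fed back as a correction.
    """
    history = [state]
    for _ in range(steps):
        error = target - state          # measured deviation from the desired value
        state = state + gain * error    # correction proportional to the deviation
        history.append(state)
    return history


# Hypothetical numbers: steer a quantity from 10.0 towards a target of 20.0.
trajectory = simulate_feedback(target=20.0, state=10.0, gain=0.5, steps=10)
```

With a gain between 0 and 1, the remaining deviation shrinks by a constant factor at every step, so the state approaches the target without ever being set to it directly. This is the sense in which feedback "controls" a system.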

Today, Singapore is regarded as a prime example of a data-controlled society. What began as a counterterrorism program now also influences economic and immigration policy, the real estate market, and school curricula. China is on a similar path (see box at the end of the text). Recently, Baidu, the Chinese equivalent of Google, invited the military to take part in the China Brain Project. It involves running so-called deep learning algorithms over the search engine data, which they then evaluate intelligently. Beyond this, however, some form of societal control is apparently also planned. According to recent reports, every Chinese citizen is to receive a points account (“Citizen Score”) that will determine under what conditions they can get a loan and whether they may practice a certain profession or travel to Europe. This monitoring of attitudes would also take into account the individual’s web-surfing behavior, and that of the social contacts one maintains (see “A Look at China”).
